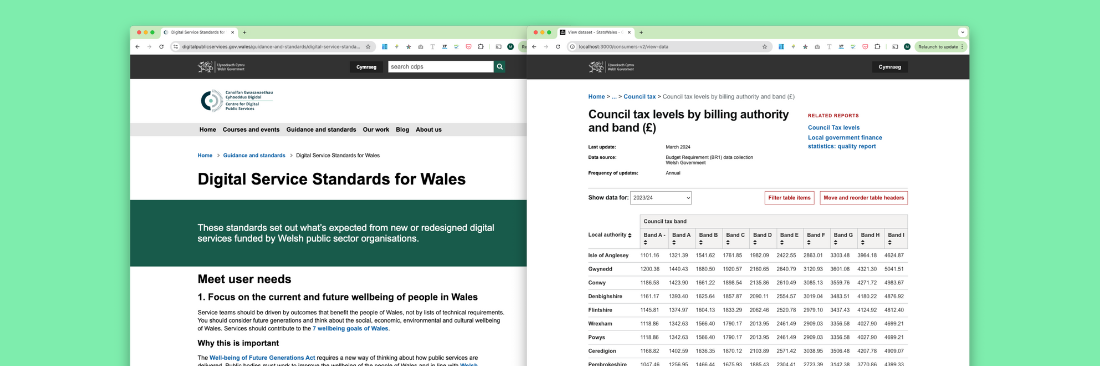

The Centre for Digital Public Services / Canolfan Gwasanaethau Cyhoeddus Digidol (CDPS) enables the digital transformation of public services across Wales. They created the Digital Service Standards for Wales in order to set out what’s expected from digital services funded by the Welsh public sector.

Staying true to their standard of ‘Iterate and improve frequently’, they’re continually reviewing and refining their processes. One area of focus is how to gain the most value out of the Welsh Service Standard – particularly through how they actually assess services against the standards.

While we at Marvell Consulting have extensive experience delivering services to a range of government service standards (e.g. the NHS and GOV.UK service standards), StatsWales was the first service we delivered to meet the Welsh standards. As such, we nominated ourselves to go through CDPS’s new assessment process.

While it wasn’t an obligation, we recognised that doing so would:

Going through the assessment proved invaluable for both us and CDPS – and as many more teams and services will go through the same process, we thought it would be helpful to share some of our learnings from the experience.

There’s a notable amount of overlap between each organisation’s service standards. However, while each one may be very familiar (e.g. ‘have the right team’, ‘meet user’s needs’ and ‘use the right technology’, etc.), each set of standards exists because each organisation has unique nuances in its contexts and needs. The Welsh Government is no different.

Two particular areas that were new for us to consider were:

Firstly, we needed to ensure we worked closely with organisations across Wales, to help ensure the impact of StatsWales could spread as widely as possible. This meant building relationships with a range of stakeholders across Wales (for instance, the Government Statistical Service) to ensure we followed best practice. We also adopted an open source, agnostic approach to technology, with specific attention paid to the experiences of other organisations in Wales – to ensure the decisions we made could benefit teams and organisations beyond our own.

Secondly, we needed to ensure we designed the service with Welsh language users’ needs in mind from the start. We’d developed numerous bilingual services previously, but this was our first one in Welsh. The primary challenge was finding actual users of our specific service who were Welsh-first. In discovery, we’d published a bilingual survey on the existing site to gather feedback and recruit users for research. Unfortunately, we had no respondents using the Welsh form. In alpha, we redoubled our efforts, reaching out more widely via stakeholders and industry groups to recruit and engage with Welsh language users, and building up a small bank of participants we could draw on throughout the alpha and beyond.

As this was a relatively new process, both we and CDPS thought we might be able to take a relatively lightweight approach to the assessment – versus the typical 4+ hour approach often taken in other areas of government. We scheduled 1.5 hours with a panel of experts from CDPS, with a view to running through a demo of the service, followed by a discussion of each of the 12 service standard points.

We were proved wrong.

1.5 hours turned out to be nowhere near enough time to discuss a service like StatsWales in the level of detail we, or the panel, wanted (or needed) to. We were keen to share the comprehensive approach we’d taken to delivery, while the assessment panel had their own areas of interest they wanted to dig into.

We scheduled an additional 1.5-hour session for later that week.

Within a week, we found out we’d passed the assessment with flying colours. On top of the confirmation that our service was exceeding expectations in a number of areas, we reaffirmed that there’s always room for improvement.

While it’s obviously good to get positive feedback on how you’ve been working and what you’ve been building, the real benefit comes from the guidance on where to focus next. An example for us was the suggestion to engage with other specialist teams in the Welsh Government for further guidance and support.

Also, you can follow all the written guidance and support available (of which the Welsh Government has published plenty), but actually talking with experts in Welsh digital service delivery is far more valuable. The additional nuance and insight our team gained through discussion with both the assessment panel and the Welsh DDaT teams we’d engaged with throughout the project proved invaluable. These other teams will inevitably have experienced, and solved, similar challenges to ours. So it’s far more efficient to learn from their experiences than to try and reinvent the wheel ourselves.

Lastly, over the course of the project, any delivery team will develop its own shared understanding and justification of its processes, decisions and outputs. Having an independent team check your working helps avoid inertia and ensures that you can continually justify your thinking, approach and rationale for the decisions you make.

Broadly speaking, the assessment felt a bit like doing a retrospective on the project as a whole. Working from the understanding that everyone did the best with what they knew at the time, it ensures we (the delivery team) take a moment to take stock and reflect on what we’ve done, how we got there and where improvements can be made, so that the next iteration is even better. We always take this approach internally, holding retrospectives on our projects sprint by sprint to help us improve and refine our processes, so these assessments are a great opportunity to reflect and refine on a phase-by-phase basis too.

We also greatly appreciated that members of the CDPS assessment panel managed to find the time to engage with us throughout the alpha, rather than just at the assessment itself. It meant they could provide guidance throughout our delivery and entered the assessment with a certain level of context, which made the assessment itself a far more fruitful conversation for everyone involved. It’s great that CDPS are willing to support our delivery in this way, and we’d recommend that other delivery teams put themselves forward for the same experience.

You can also read CDPS’s experience of the assessment process in their own blog post. If you’d like to find out a bit more about this project, take a look at our discovery and alpha case studies, or get in touch at hello@marvell-consulting.com.
